Museums and cultural institutions have started releasing their catalog metadata under more liberal licenses recently. Culturally this is a big change for them because traditionally they are hoarders and "guardians of the truth," so it's hard for them to give up control.

There are enormous benefits to sharing their metadata though and, as they're gradually coming to realize, they'll receive back more than they give. One of the first museums to do this was the

Powerhouse Museum who's

collection metadata was used as an example for the

Free Your Metadata project.

Two big benefits of having your metadata publicly available are that 1) it can be linked to other sources to find interesting connections in other collections, archives, etc and 2) you can get other people to help you clean it up and correct it (bonus!).

The

Cooper-Hewitt National Design Museum, part of the Smithsonian, is one of the latest to make its

collection data available online for anyone to reuse under a CC0 license. A couple of weeks ago they released a concordance of their

people matched up with entries in other data sets such as Wikipedia, Freebase, the Library of Congress Name Authority File, etc.

They describe it as a pre-alpha release (we'll see why in a minute) and explicitly ask for help in cleaning it up, so let's take a look at how we might help out as volunteer citizen metadata curators.

The looking at the

people, we can see that it include "corporate persons" (e.g. IBM) and has not only artists, but clients, manufacturers and anyone who might have a role in the creation or provenance of a design artefact. There are 4,869 entries in their database, but the concordance only contains the 1,507 which have been matched against Wikipedia. There are 725 links to Freebase, 222 to the Museum of Modern Art, 113 to the Virtual International Authority File (VIAF, a union catalog of authors by the world's libraries), etc.

Let's look at an example page on their web site. The page for

Frederic Edwin Church includes links to both Wikipedia and Freebase. If we follow the

Freebase link, we see that it has additional links including not only Wikipedia, but the Library of Congress, New York Times, VIAF, etc. It also has a large amount of structured data in both human and machine-readable form including his works, exhibitions of his work, books about him, his teacher and a student. It is this network of data that we're connecting to when we attempt to link Cooper-Hewitt entries to this web of links.

Down to work! We're going to use Refine (aka Google Refine née Freebase Gridworks). You can download it for Windows, Mac, or Linux

here. When you start it, it will open a page in your web browser where you can create a new project. We're working with public data here, but it's a desktop app and all the data stays local, so if we were working on private data, we wouldn't need to worry about it escaping into the cloud. Create a new project from the

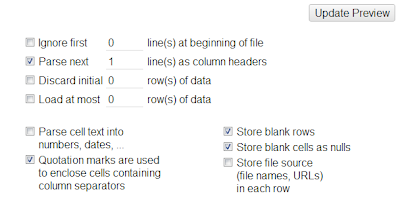

CSV file and turn off

Parse cell text into numbers, dates, ... so that the identifiers with leading zeros don't have them changed.

Let's do a quick analysis of what columns are populated. The spreadsheet uses the signal value 0 in many of the columns to indicate something is which doesn't have an ID, but uses blank for this in the Freebase column Let's get convert all the 0s to blanks to make them the same. Select

Edit for the first cell in the

viaf:id column and delete the 0, then click

Apply to All Identical Cells. All the 0s in the column are gone! Repeat the process for the other columns.

We'll look up the Wikipedia IDs in Freebase to see what they refer to. Because Freebase includes all the Wikipedia IDs, we can do the lookup by ID instead of name. Here's how:

- Select Reconcile->Start reconciling...

- Choose Freebase Query-based Reconciliation

- select the a Freebase key in option

- select the this namespace option

- fill in the namespace identifier /wikipedia/en_id which represents the numeric identifier associated with the English Wikipedia article

When everything is filled in, click

Start Reconciling. In less than a minute, you'll have all 1507 IDs linked to their corresponding Freebase topic. There is another namespace with the ID

/wikipedia/en which uses the title from the Wikipedia URL if you ever need to match that way. Very handy for enhancing someones list of Wikipedia links with additional information!'

There are now two new facets on the left side of the screen. Looking at the facet labeled

wikipedia:id judgement, we can see that not all IDs were matched up. If we click

none to select only those entries which didn't match and check out a few of them on the Cooper-Hewitt site, we see that they are things like disambiguation pages, redirects, etc. Freebase doesn't include these because they don't represent a single thing. We don't know for sure, but perhaps the matching process used by the museum only took into account name matches without checking the type of the thing being matched.

Let's delete all these bad matches. It's possible that the correct link is on that disambiguation page and could be found by a human, but for our purposes it's easier to just put all of these back in the

to-b-processed bin and deal with them later.

- Make sure the none entry is selected in the wikipedia:id judgement facet and nothing is selected in any other facet

- in the wikipedia:id menu select Edit cells->Common Transforms->Blank out cells

As a sanity check, let's make sure that the given Freebase IDs all match their corresponding Wikipedia IDs. Now that our

wikipedia:id column is linked to Freebase, we can look up any other value that Freebase knows. Let's add a column containing the Freebase Machine ID.

- Select Edit column -> Add columns from Freebase...

- In the Add property input field type Machine ID and choose /type/object/mid

- confirm that the preview display shows the MIDs and then click OK

Now we'll configure our facets so that we can see if the values in our newly added column match those that existed in the concordance.

- In the freebase:id column menu, choose Facet->Customized Facets->Facet by blank

- Select false in the newly created facet (ie non-blank values)

- In same column, choose Facet->Custom Text Facet... and use the expression: grel:value==cells['Machine ID'].value

We see that all 782 non-blank values match. So far, so good. Click Remove All to clear the facets.

You may have noticed that we gained an extra 263 rows when we added the new column. In Freebase a topic can have multiple MIDs if it was merged during its lifetime. This allows existing references to continue to work. It doesn't matter which one we pick and since Cooper-Hewitt appears to have chosen the first one, we'll delete the extra rows with others.

- Select (blank) in the wikipedia:id judgement facet. This column was fully populated before, so this selects all the new rows.

- In the All column menu choose Edit rows-Remove all matching rows

Now let's add a some value. There are 462 entries which have Wikipedia IDs, but no Freebase ID. Let's fill those in.

- Select Edit cells->Transform... from the column menu for the freebase:id column

- Use the expression cells['Machine ID'].value

- After confirming the results we can remove the Machine ID column Edit column->Remove this column

As a sanity check, let's see if the names of the Freebase topics match the names in the ch:name column. We'll use a Custom Text facet on the wikipedia:id column (which has been reconciled against Freebase) with the expression: cell.recon.match.name.unescape('html')==cells['ch:name'].value

to compare the values in the two columns. This takes the name of the matched Freebase topic, unescapes the HTML entities (a workaround for a display quirk) and compares the result to the value in the other column. We've got 898 exact matches, 346 mismatches, and 263 topics which aren't in Freebase for some reason.

If we take a look at the mismatches, we can see a variety of types of differences. There's

Création Baumann for

Création Baumann AG and

Honeywell instead of

Honeywell, Inc. because the Freebase standard is to not include suffixes like Inc., Co., AG, SARL, etc. There are minor capitalization differences like

Jean le Pautre for

Jean Le Pautre and variant spellings like

Wenceslas Hollar instead of

Wenceslaus Hollar.

Other differences seem more significant and probably deserve closer review. For example,

Henry Dreyfuss Associates clearly isn't the same thing as

Henry Dreyfuss himself (although in the world of Wikipedia they could easily be discussed in the same article). Ditto

Chermayeff & Geismar as compared to

Ivan Chermayeff.

One way to tackle this is to attempt to reconcile the 600 or so mismatches and non-matches to see if we can match them up with a Freebase topic. We'll just use the name as given by Cooper-Hewitt and see what we get for matches. We can then compare the two sets of IDs to see where the problems lie.

- In the wikipedia:id judgement facet, select both false and (error) (click include in the hover menu to select the second entry)

- In the ch:name column, choose Reconcile->Start reconciling

- Select the Freebase Reconciliation Service

- Select no particular type (another possible strategy would be to first reconcile against Visual Artist then Organization, etc, but we'll take our chances and try to do them all at once)

When we're reconciling against Freebase, we may occasionally run across duplicates such as

Edward Fella and

Ed Fella. We can flag these to be merged on Freebase. This will queue them up for voting by the Freebase community and as soon as three people agree, the merge will be processed. Of course, we have to make sure that we don't confuse something like the book

Edward Fella with the person.

Freebase isn't limited to topics which have articles in Wikipedia, so we'll find some additional matches such as

Ronan or

Erwan Bouroullec who have an entries with their Netflix and IMDB IDs because of their appearance in the movie

Objectified.

Freebase also allows us to create entries which might be missing. For example, there's an entry for Donald Deskey, but none for his firm Deskey-Volmer. We can not only

create it, but also link the new entry to both founders.

Of course the fact that the names match doesn't necessarily mean that we've got the correct entry. The singer

Beck didn't manufacter the lens for this

old camera and Elizabeth I wasn't the 12 year old who embroidered

this sampler in 1834. We've done enough for now, so we'll come back in a future installment and try to clean up more of this.

The step for now is to publish our improvements so that others can take advantage of them. We'll export our improved data as a CSV from Refine and commit it to our

fork on Github. If you're rather start from where I left off, feel free to clone my copy of the data and improve it some more.

In future posts, we'll look at some additional cleanup and enhancement strategies as well as examine some interesting things that we can do with the interconnections that have been created.

You can discuss and provide feedback on

Google+